Scenarios

Diverse Scenarios

Over 40 diverse scenarios in urban, suburban, rural and highway environments

Two Novel Maps

Two novel digital-twin maps from Karlsruhe and Tübingen (Germany) for a more realistic environment

Diverse Road Users

First synthetic collective perception dataset to include not only cars, vans, and motorcyclists but also cyclists and pedestrians

Edge Case Scenarios

Edge cases such as a roundabout or tunnel sections to allow for a comprehensive evaluation

Environmental Conditions

Times of Day

Recordings at different times of day, including nighttime

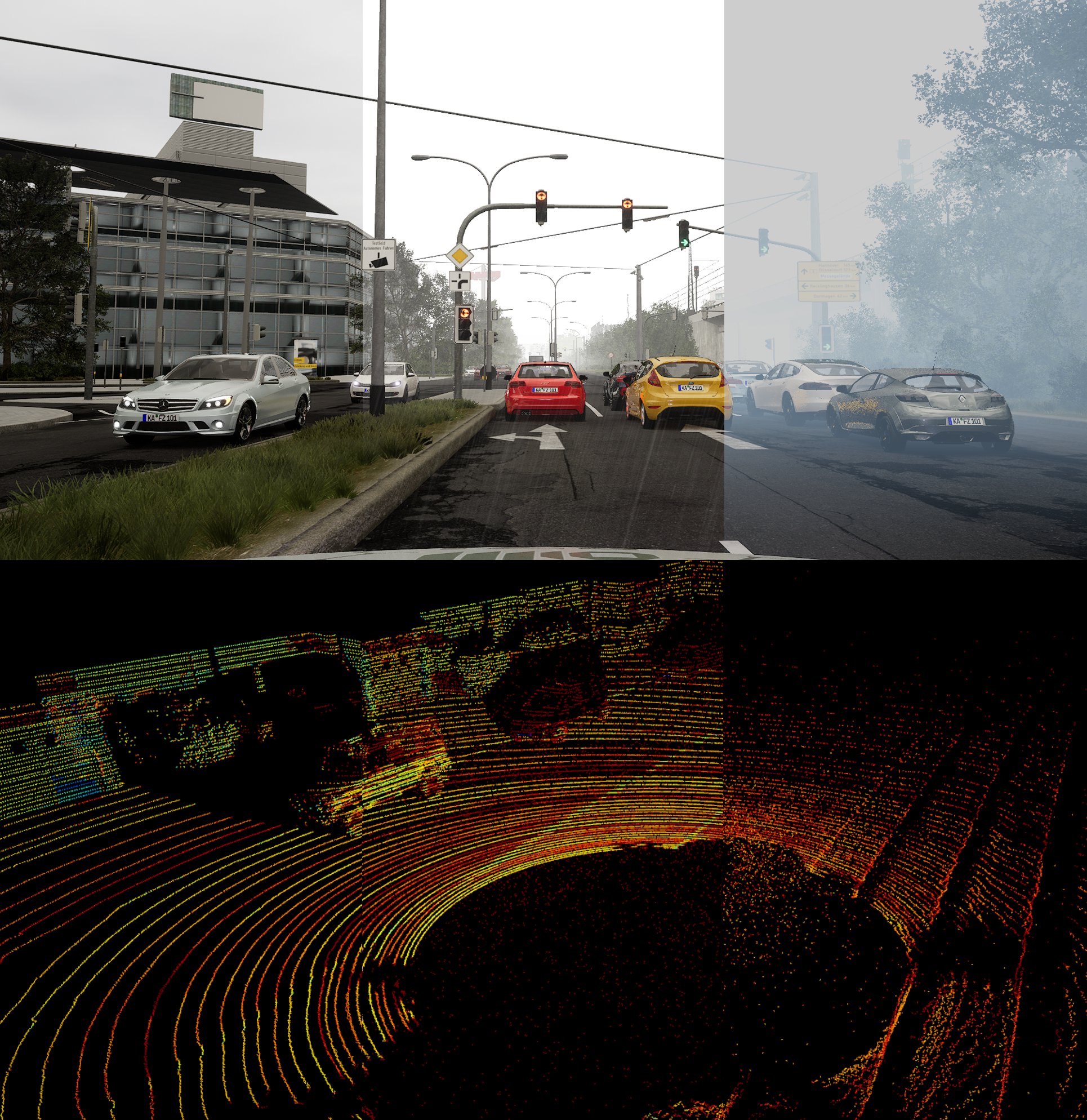

Weather Conditions

Three varying intensities for rain and fog to allow for an evaluation under adverse weather

Image Augmentation

Realistic and physically accurate rendering of falling rain, with raindrops on the windshield, and of fog in the image data

Point Cloud Augmentation

Realistic models to augment point clouds to simulate weather-affected LiDAR data

Sensor Suite

Sensor Carriers

The sensor suite is mounted on a varying number of connected and automated vehicles (CAVs) and Roadside Units (RSUs) per scenario

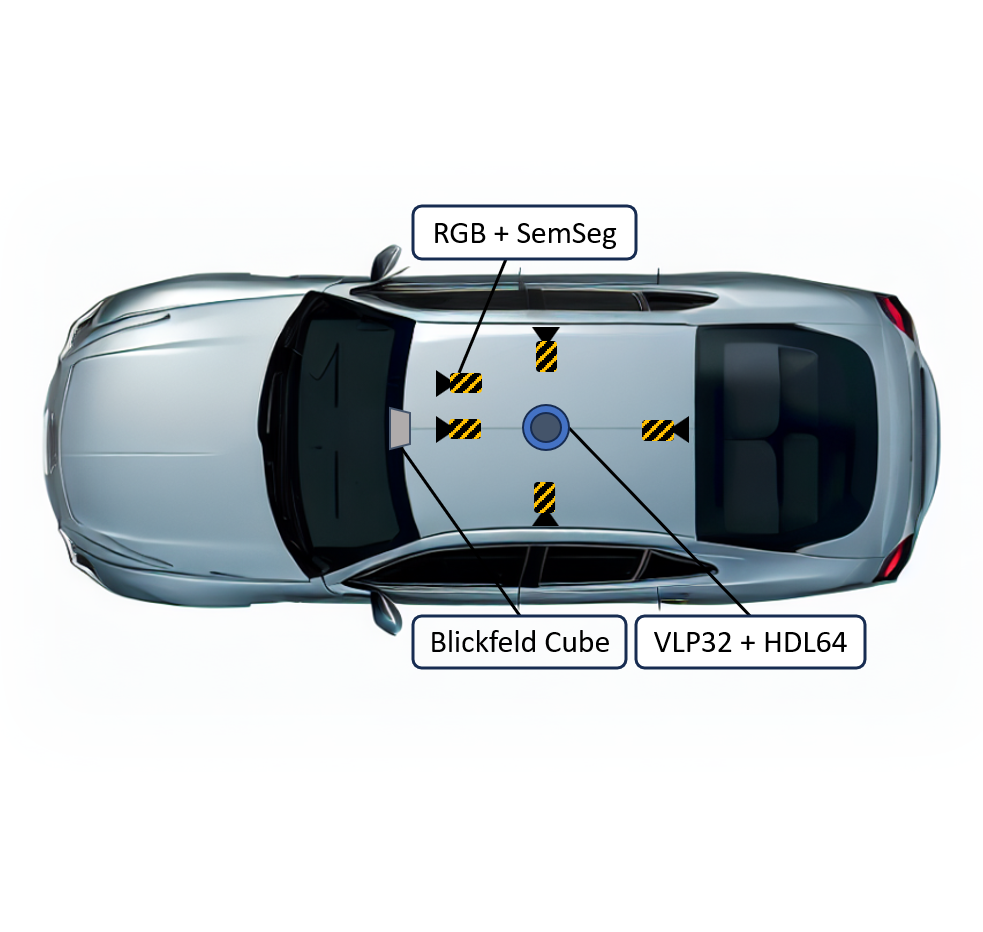

RGB Cameras

Five RGB cameras provide stereo images as well as a 360° surround view with a resolution of 1920x1080 px

Semantic Segmentation Cameras

Five semantic segmentation cameras with the same specifications as the RGB cameras

32 and 64-layer 360° LiDARs

Realistic LiDAR sensor model for 32-layer and 64-layer 360° LiDAR (Velodyne VLP32 and HDL64)

Solid State LiDAR

First dataset integrating a solid state LiDAR (Blickfeld CUBE)

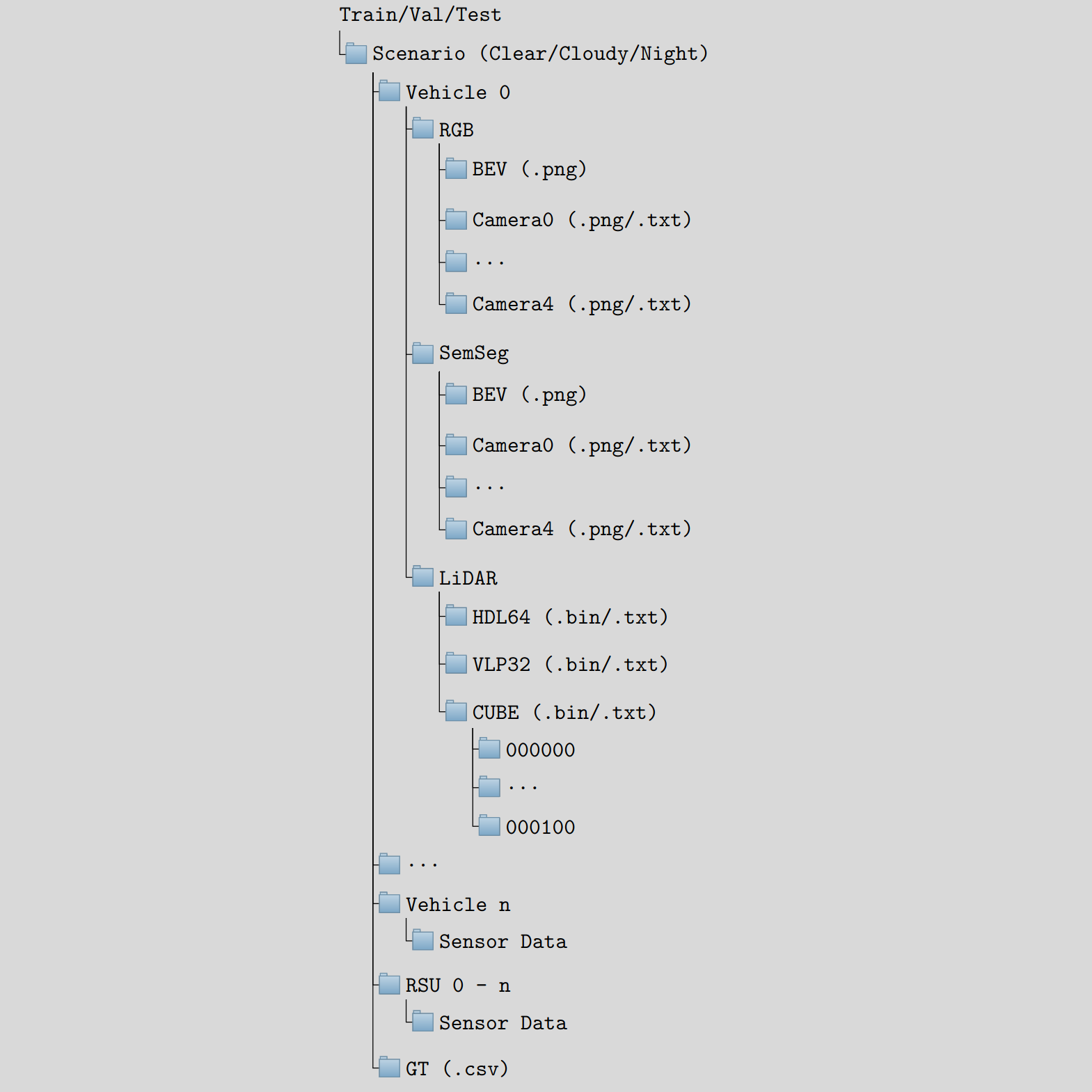

Data Structure

Separate Environmental Conditions

Weather conditions are provided separately to allow for an independent evaluation

Data Hierarchy

Hierarchically structured sensor data and transformation matrices per vehicle per scenario

Coordinate Transforms

Easy coordinate transformation with already given transformation matrices for each vehicle and sensor
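
A minimal sketch of how such a matrix might be applied, assuming the transformations are stored as 4x4 homogeneous matrices (the storage layout is not specified here). The function name `transform_points` and the example matrix `sensor_to_world` are illustrative and not part of the SCOPE toolkit.

```python
import numpy as np


def transform_points(points: np.ndarray, matrix: np.ndarray) -> np.ndarray:
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    # Append a column of ones so the translation part of the matrix applies.
    homogeneous = np.hstack([points, np.ones((points.shape[0], 1))])
    # Points are stored as rows, so multiply by the transposed matrix.
    transformed = homogeneous @ matrix.T
    return transformed[:, :3]


# Hypothetical sensor-to-world matrix: 90 degree rotation about z plus a translation.
sensor_to_world = np.array([
    [0.0, -1.0, 0.0, 10.0],
    [1.0,  0.0, 0.0,  5.0],
    [0.0,  0.0, 1.0,  2.0],
    [0.0,  0.0, 0.0,  1.0],
])

points_sensor = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.5]])
points_world = transform_points(points_sensor, sensor_to_world)
```

Chaining two such transforms (for example sensor to vehicle, then vehicle to world) would amount to multiplying the matrices before applying them to the points.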

File Formats

Common file formats such as .bin, .txt, and .png for easy and efficient data loading
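
As a sketch of what reading a point cloud might look like, the example below assumes that `.bin` files hold flat float32 values with four values per point (x, y, z, intensity), as in the KITTI convention. Neither assumption is confirmed by this page, and the helper `load_point_cloud` is hypothetical; the toolkit's own loaders should be preferred where available.

```python
import os
import tempfile

import numpy as np


def load_point_cloud(path: str, num_features: int = 4) -> np.ndarray:
    """Read a flat float32 binary file into an (N, num_features) array."""
    raw = np.fromfile(path, dtype=np.float32)
    if raw.size % num_features != 0:
        raise ValueError(
            f"{path}: {raw.size} values is not a multiple of {num_features}"
        )
    return raw.reshape(-1, num_features)


# Round-trip demonstration with synthetic values (not real SCOPE data).
demo = np.array([[1.0, 2.0, 3.0, 0.5], [4.0, 5.0, 6.0, 0.9]], dtype=np.float32)
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.bin")
    demo.tofile(demo_path)
    loaded = load_point_cloud(demo_path)
```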

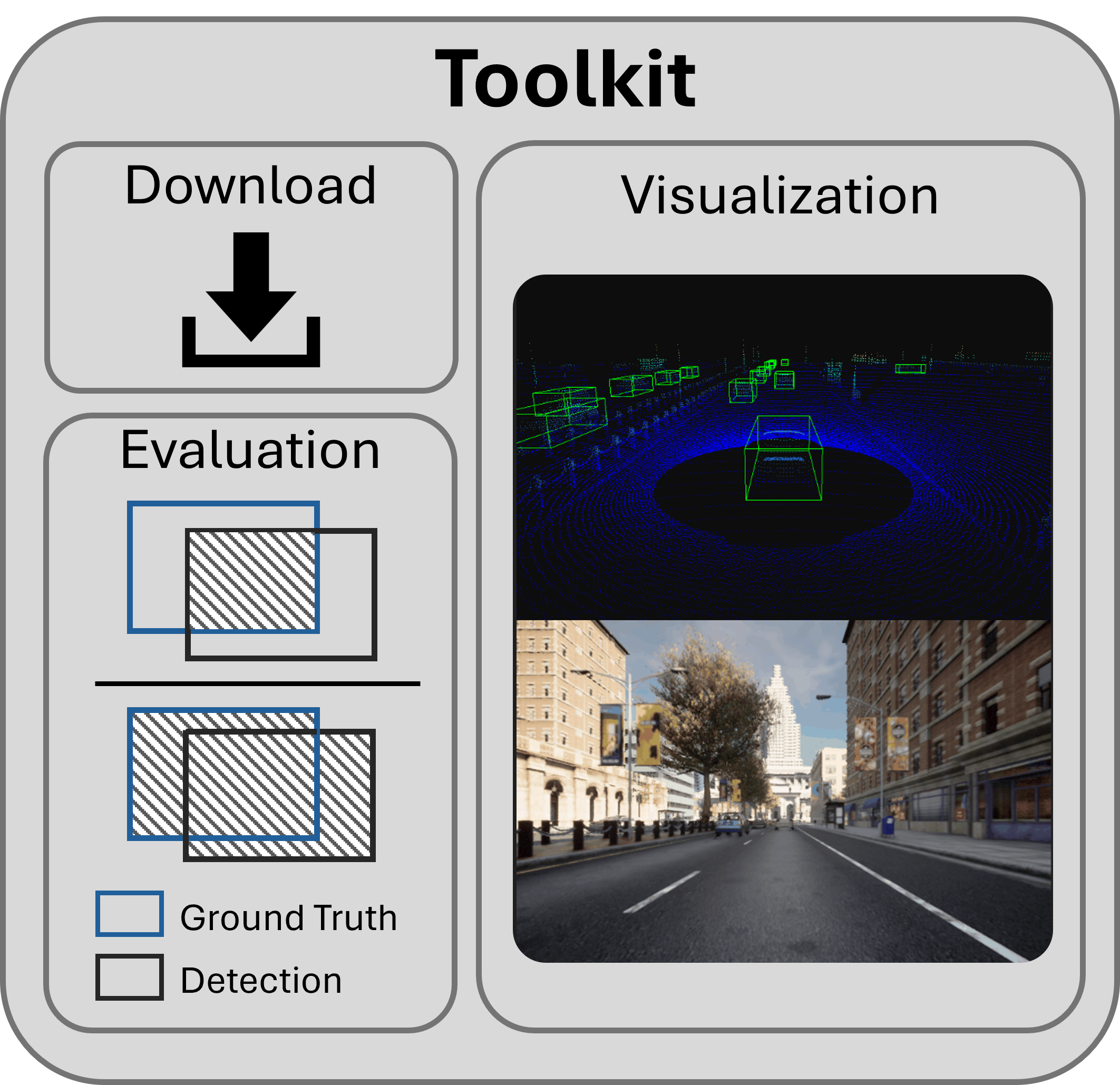

Toolkit

Installation

Easy toolkit installation; just run: pip install scope-toolkit

Download

Efficient parallel downloading and extraction into the correct structure

Visualization

The visualization module allows easy exploration of the dataset

Data Loading

Provided dataset class for efficient training and testing in PyTorch

Evaluation

Evaluation module for easy and fair comparison of different methods using state-of-the-art metrics

FAQs

Frequently Asked Questions

Why should I use SCOPE?

SCOPE is the most comprehensive synthetic collective perception dataset. It is the first dataset with realistic LiDAR sensor models, including a solid state LiDAR, and weather simulations for camera and LiDAR. Moreover, in contrast to other publicly available datasets, vulnerable road users are included.

How many Frames does SCOPE consist of?

SCOPE consists of 44 scenarios, each 100 frames (10 s) long and recorded under four environmental conditions. This results in a total of 17,600 frames.
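
The total can be reproduced with a few lines of arithmetic. The constant names below are illustrative; the 10 Hz frame rate is not stated explicitly but follows from 100 frames spanning 10 s.

```python
NUM_SCENARIOS = 44
FRAMES_PER_SCENARIO = 100
CONDITIONS_PER_SCENARIO = 4
SCENARIO_DURATION_S = 10

# Every scenario is available once per environmental condition.
total_frames = NUM_SCENARIOS * FRAMES_PER_SCENARIO * CONDITIONS_PER_SCENARIO

# Implied frame rate: frames per scenario divided by its duration in seconds.
frame_rate_hz = FRAMES_PER_SCENARIO / SCENARIO_DURATION_S

print(total_frames)   # 17600
print(frame_rate_hz)  # 10.0
```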

What is the Train/Validation/Test Split?

SCOPE consists of 12,320 frames for training, 1,760 frames for validation and 3,520 frames for testing.
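
A quick consistency check shows that these three counts add up to the 17,600 frames of the full dataset and correspond to a 70/10/20 split. The dictionary keys are illustrative names, not identifiers from the toolkit.

```python
split = {"train": 12_320, "val": 1_760, "test": 3_520}

total = sum(split.values())
# Share of the full dataset that falls into each subset.
shares = {name: count / total for name, count in split.items()}

for name, share in shares.items():
    print(f"{name}: {split[name]} frames ({share:.0%})")
```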

How many Sensors/Vehicles are present in the Dataset?

Vehicles and Roadside Units are equipped with sensors. The scenarios contain up to 24 collaborative agents (20 CAVs + 4 RSUs) and up to 60 further road users as passive traffic.

Who created SCOPE?

SCOPE was created by Jörg Gamerdinger and Sven Teufel (PhD students @ University of Tübingen), assisted by Stephan Amann, Jan-Patrick Kirchner and Süleyman Simsek (BSc/MSc students). The work was supervised by Prof. Oliver Bringmann (Head of Embedded Systems Group, University of Tübingen).

How to cite this Work?

@INPROCEEDINGS{SCOPE-Dataset,
  author={Gamerdinger, Jörg and Teufel, Sven and Schulz, Patrick and Amann, Stephan and Kirchner, Jan-Patrick and Bringmann, Oliver},
  booktitle={2024 IEEE 27th International Conference on Intelligent Transportation Systems (ITSC)},
  title={SCOPE: A Synthetic Multi-Modal Dataset for Collective Perception Including Physical-Correct Weather Conditions},
  year={2024},
  pages={1-8}
}